My research focuses on learning unified representations for long videos, leveraging vision–language models, multimodal inputs (e.g., RGB, language, 3D poses and audio), and both egocentric and exocentric viewpoints. I’m also interested in vision–language–action models for robot learning and in diffusion models for video generation.


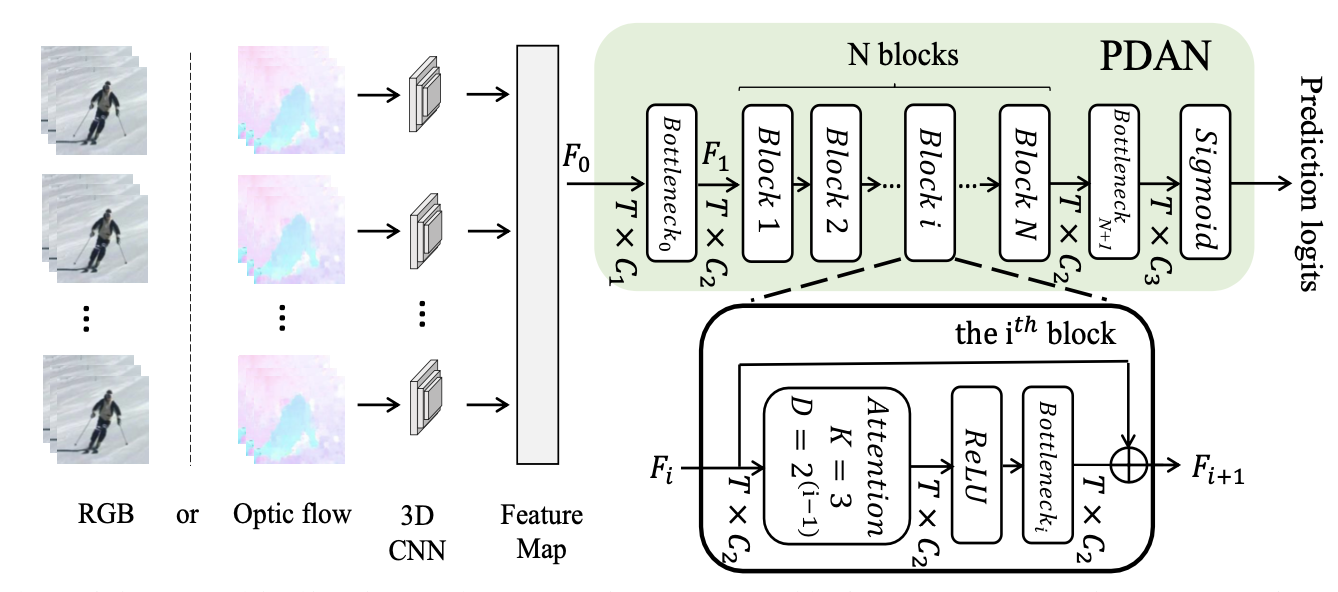

I am an Assistant Professor in the Department of Computer Science at the University of North Carolina at Charlotte. At UNC Charlotte, I work on video representation learning and robotic vision. I am a member of the AI4Health Center and one of the founding members of the Charlotte Machine Learning Lab (CharMLab) at UNC Charlotte. Before this, I was a Postdoctoral Associate at Stony Brook University under the supervision of Michael S. Ryoo. In 2020, I completed my Ph.D. in Computer Science at INRIA, Sophia Antipolis, France, under the supervision of Francois Bremond and Monique Thonnat. My Ph.D. thesis is titled "Spatio-temporal attention mechanisms for Action Recognition", and my defense presentation is available to watch. I completed my postgraduate degree in Computer Science at the National Institute of Technology (NIT), Rourkela.

2025

2024

2023

2022

Lab members

- Dominick Reilly

- Arkaprava Sinha

- Manish Kumar Govind (co-supervised with Prof. Pu Wang)

- Weston Bondurant (co-supervised with Prof. Stephanie Schuckers)

- Wenhao Chi

- Nitin Chandrasekhar (UG student at UNC Charlotte)


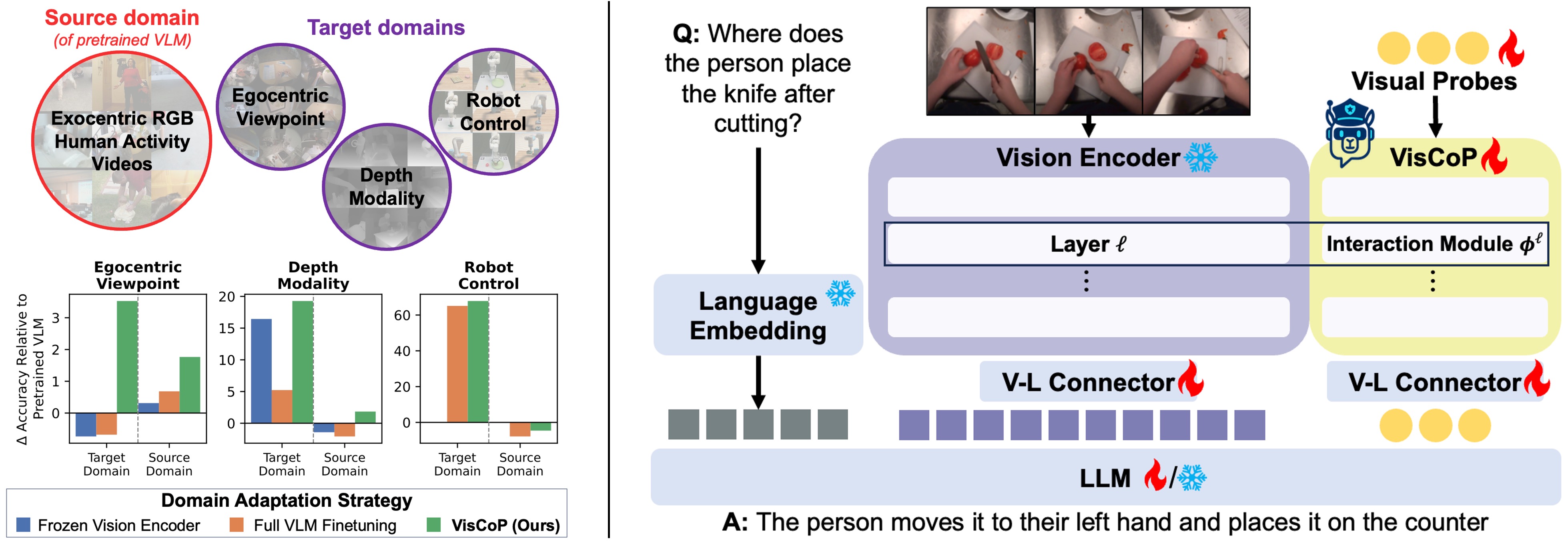

Dominick Reilly, Manish Kumar Govind, Le Xue, and Srijan Das. Preprint. arXiv / Code. We introduce Vision Contextualized Probing (VisCoP), which augments the VLM's vision encoder with a compact set of learnable visual probes that enables efficient domain-specific adaptation with minimal modification to pretrained parameters.

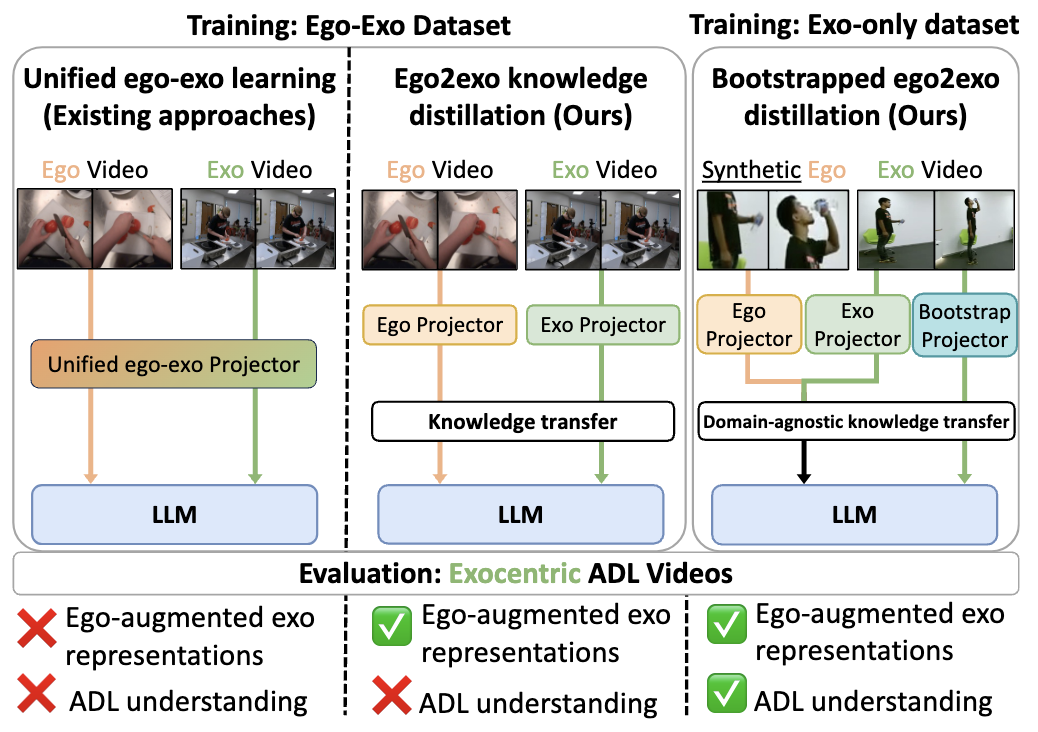

Dominick Reilly, Manish Kumar Govind, Le Xue, and Srijan Das. Preprint. arXiv / Code. We leverage the complementary nature of egocentric views to enhance LVLMs' understanding of exocentric ADL videos through online ego2exo distillation.

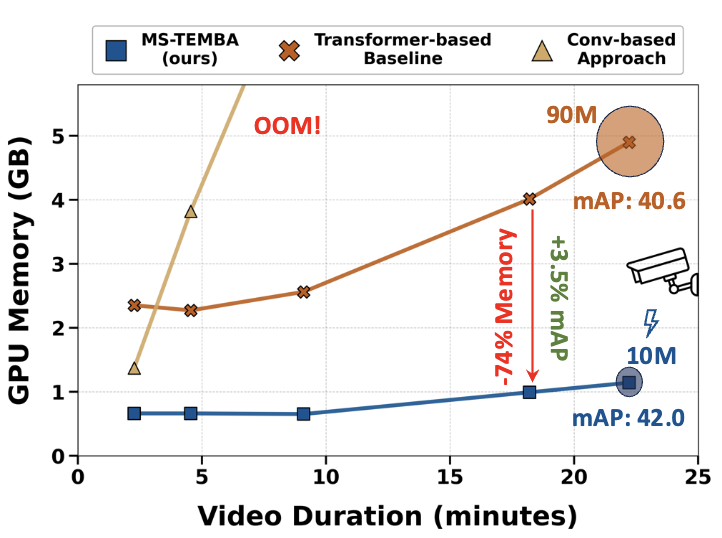

Arkaprava Sinha, Monish Soundar Raj, Pu Wang, Ahmed Helmy, and Srijan Das. Preprint. arXiv / code. MS-Temba is the first Mamba-based architecture for action detection in long untrimmed videos that can be trained and tested on an NVIDIA Jetson Nano.

Saarthak Kapse, Pushpak Pati, Srikar Yellapragada, Srijan Das, Rajarsi R. Gupta, Joel Saltz, Dimitris Samaras, Prateek Prasanna. To Appear in ICCV 2025. arXiv / Code. Gigapixel Vision-Concept Knowledge Contrastive pretraining (GECKO) aligns WSIs with a Concept Prior to deliver clinically meaningful interpretability.

Muhammad Usama Saleem, Ekkasit Pinyoanuntapong, Mayur Jagdishbhai Patel, Hongfei Xue, Ahmed Helmy, Srijan Das, Pu Wang. To Appear in ICCV 2025. arXiv / Website. A novel generative masked model for hand mesh recovery that synthesizes plausible 3D hand meshes.

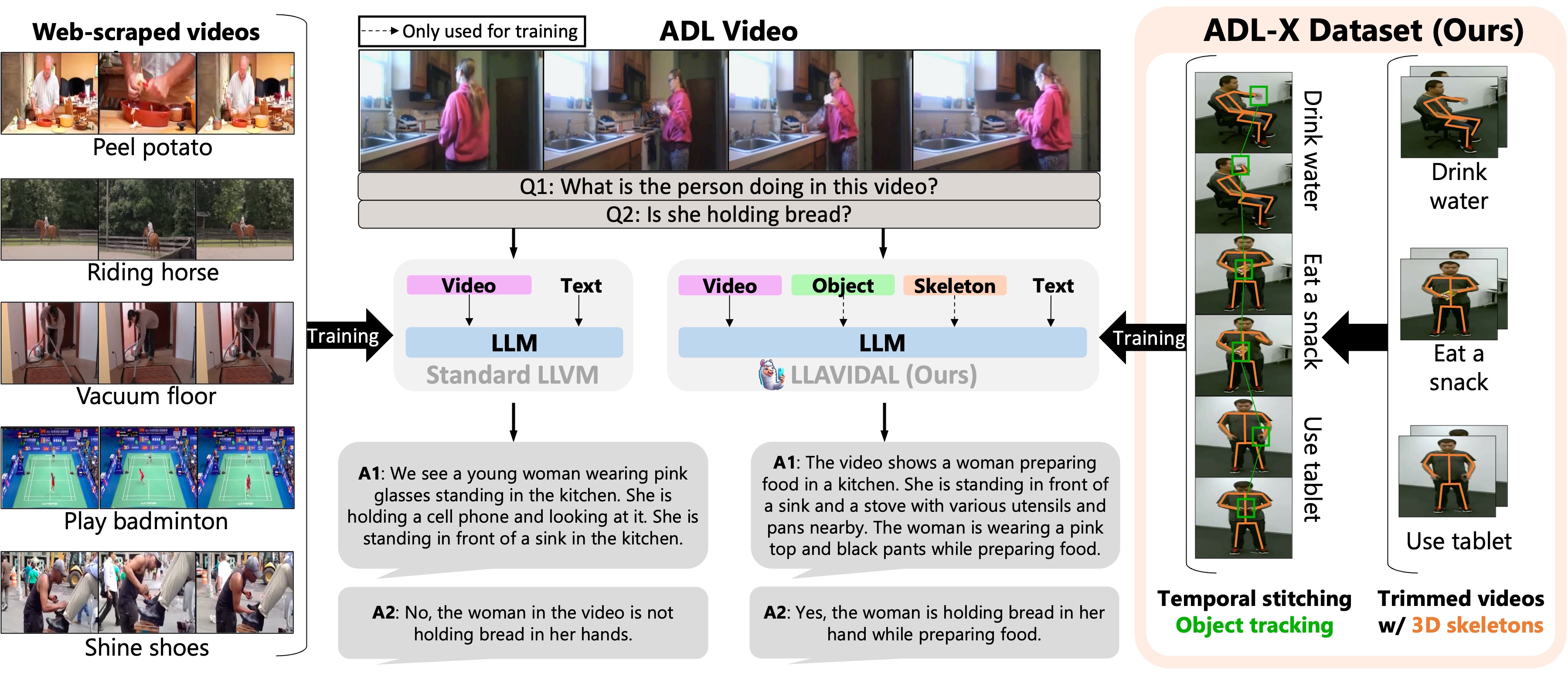

Dominick Reilly, Rajatsubhra Chakraborty, Arkaprava Sinha, Manish Kumar Govind, Pu Wang, Francois Bremond, Le Xue, Srijan Das. CVPR 2025. arXiv / website / code. LLAVIDAL, a Large Language Vision Model, incorporates 3D poses and relevant object trajectories to understand the intricate spatiotemporal relationships within ADLs.

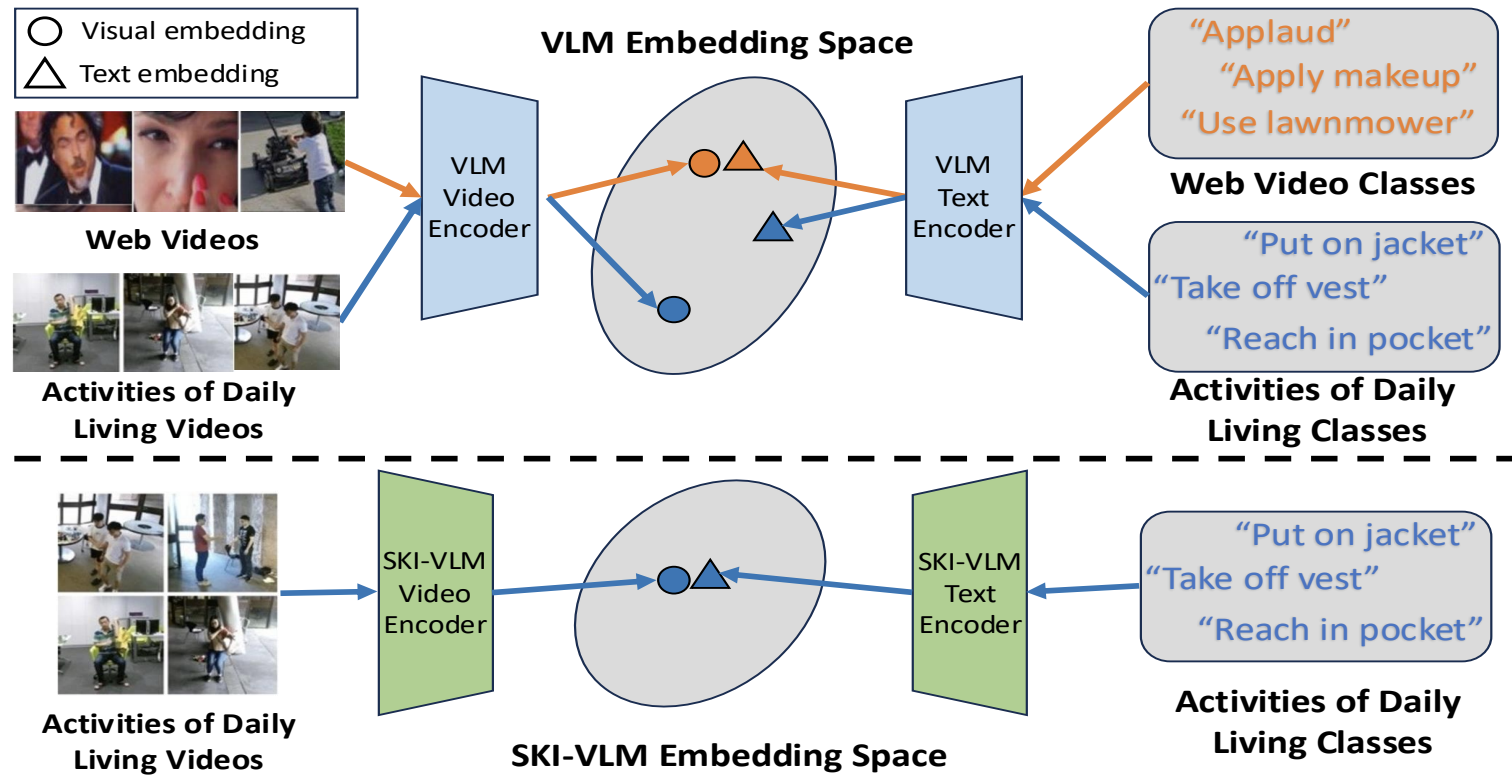

Arkaprava Sinha, Dominick Reilly, Francois Bremond, Pu Wang, and Srijan Das. AAAI 2025. arXiv / Code. Ski-models introduce 3D skeletons into the vision-language embedding space to enable effective zero-shot learning for ADL.

Muhammad Usama Saleem, Ekkasit Pinyoanuntapong, Pu Wang, Hongfei Xue, Srijan Das, Chen Chen. AAAI 2025. arXiv / Website. A generative framework that reformulates monocular HMR as an image-conditioned generative task.

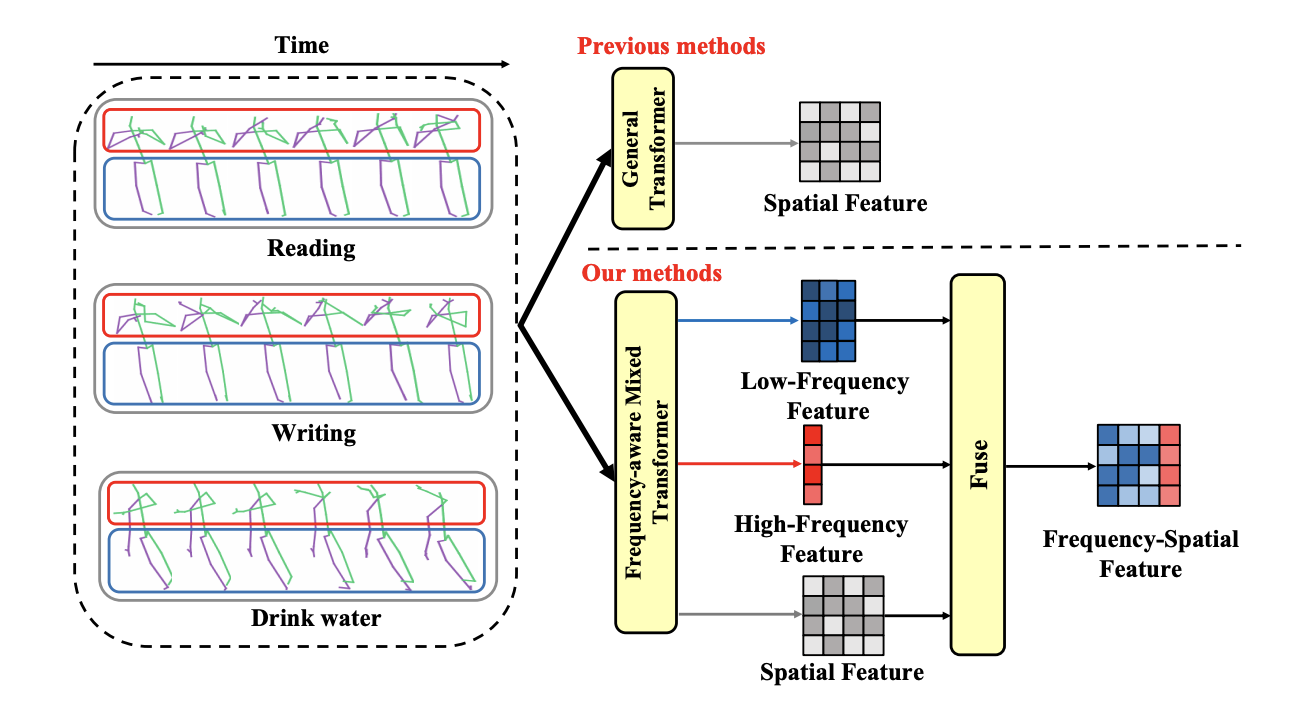

Wenhan Wu, Ce Zheng, Zihao Yang, Chen Chen, Srijan Das, Aidong Lu. ACM MM 2024. arXiv / code. A frequency-aware attention module to unweave skeleton frequency representations for action recognition.

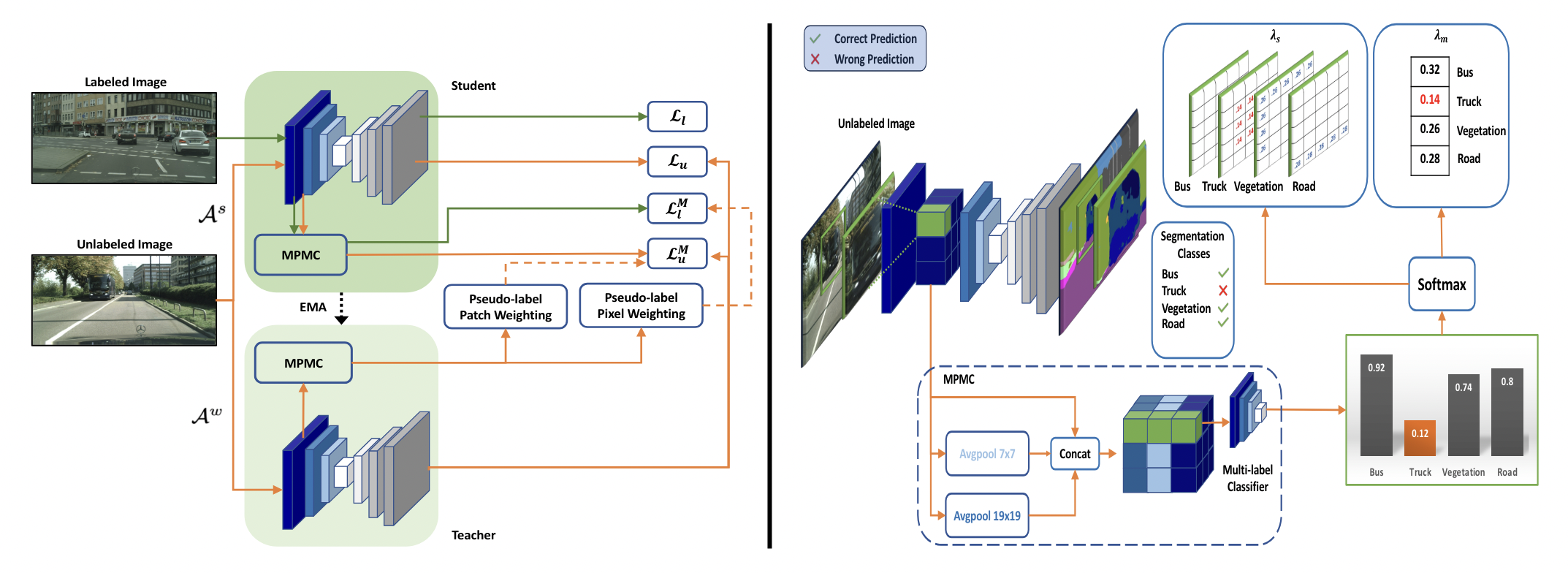

Prantik Howlader, Srijan Das, Hieu Le, Dimitris Samaras. ECCV 2024. arXiv / code. A novel plug-in module designed for existing semi-supervised segmentation frameworks that offers patch-level supervision.

Ekkasit Pinyoanuntapong, Muhammad Usama Saleem, Pu Wang, Minwoo Lee, Srijan Das, Chen Chen. ECCV 2024. arXiv / website / code. BAMM is a novel text-to-motion generation framework that captures rich, bidirectional dependencies among motion tokens.

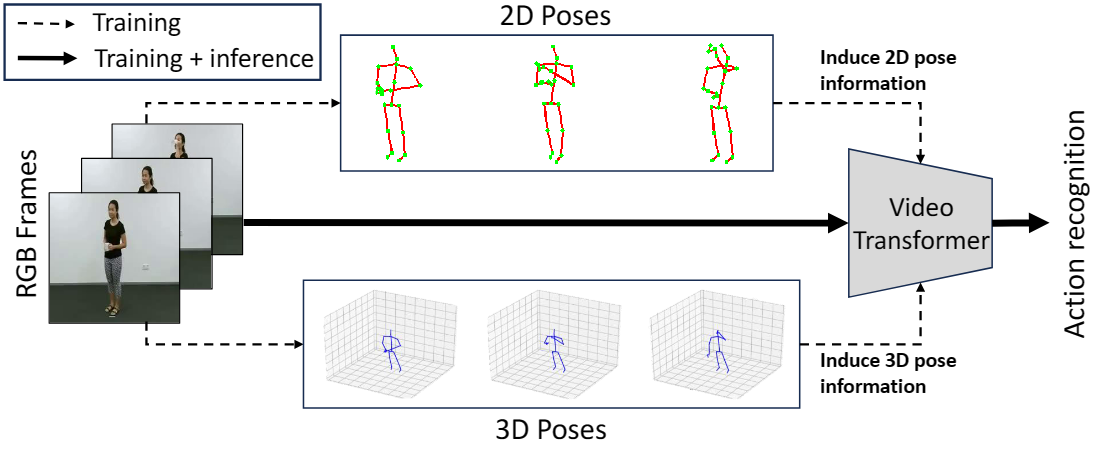

Dominick Reilly and Srijan Das. CVPR 2024. arXiv / code. We introduce the first Pose Induced Video Transformer: PI-ViT (or π-ViT), a novel approach that augments the RGB representations learned by video transformers with 2D and 3D pose information.

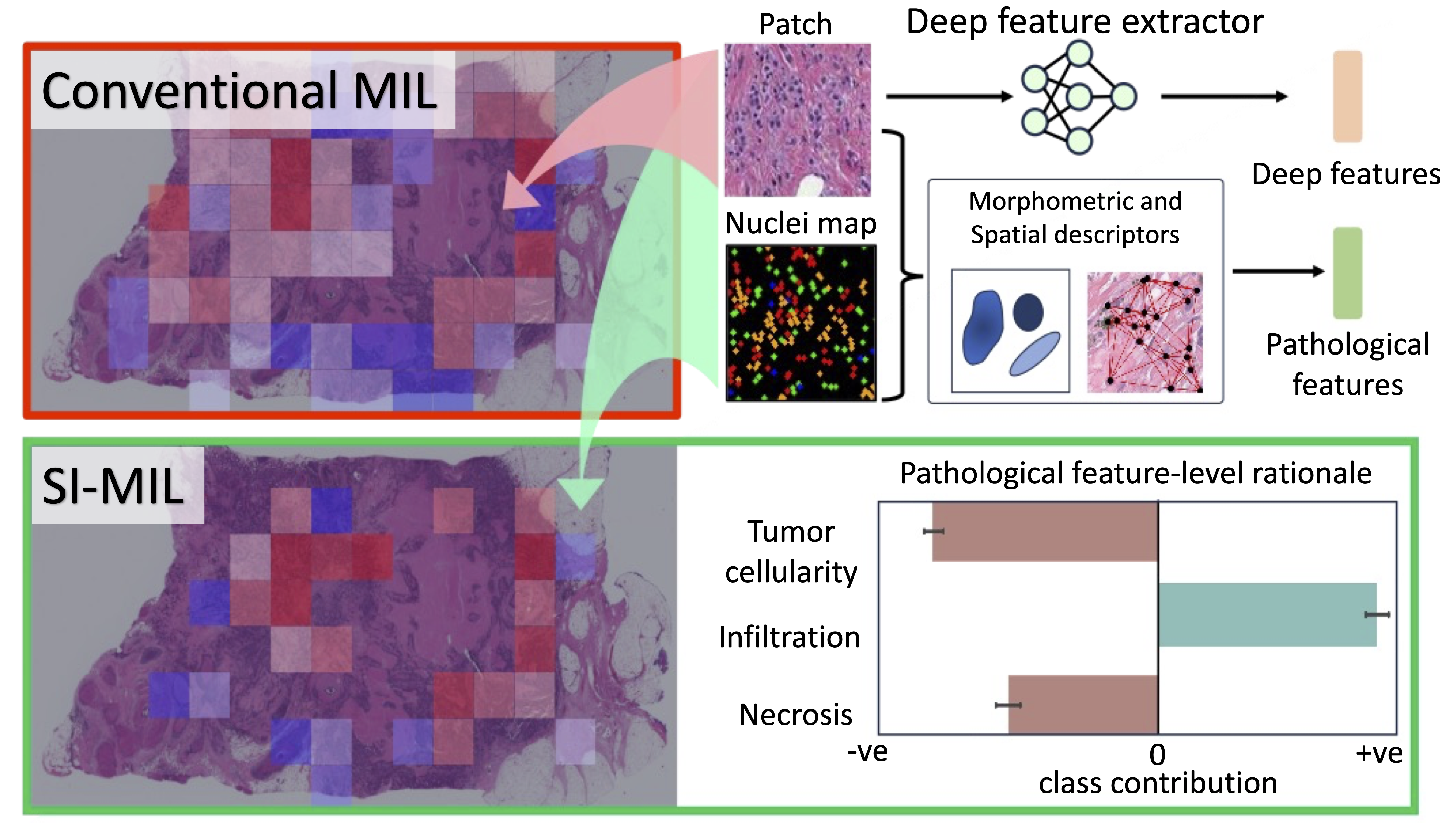

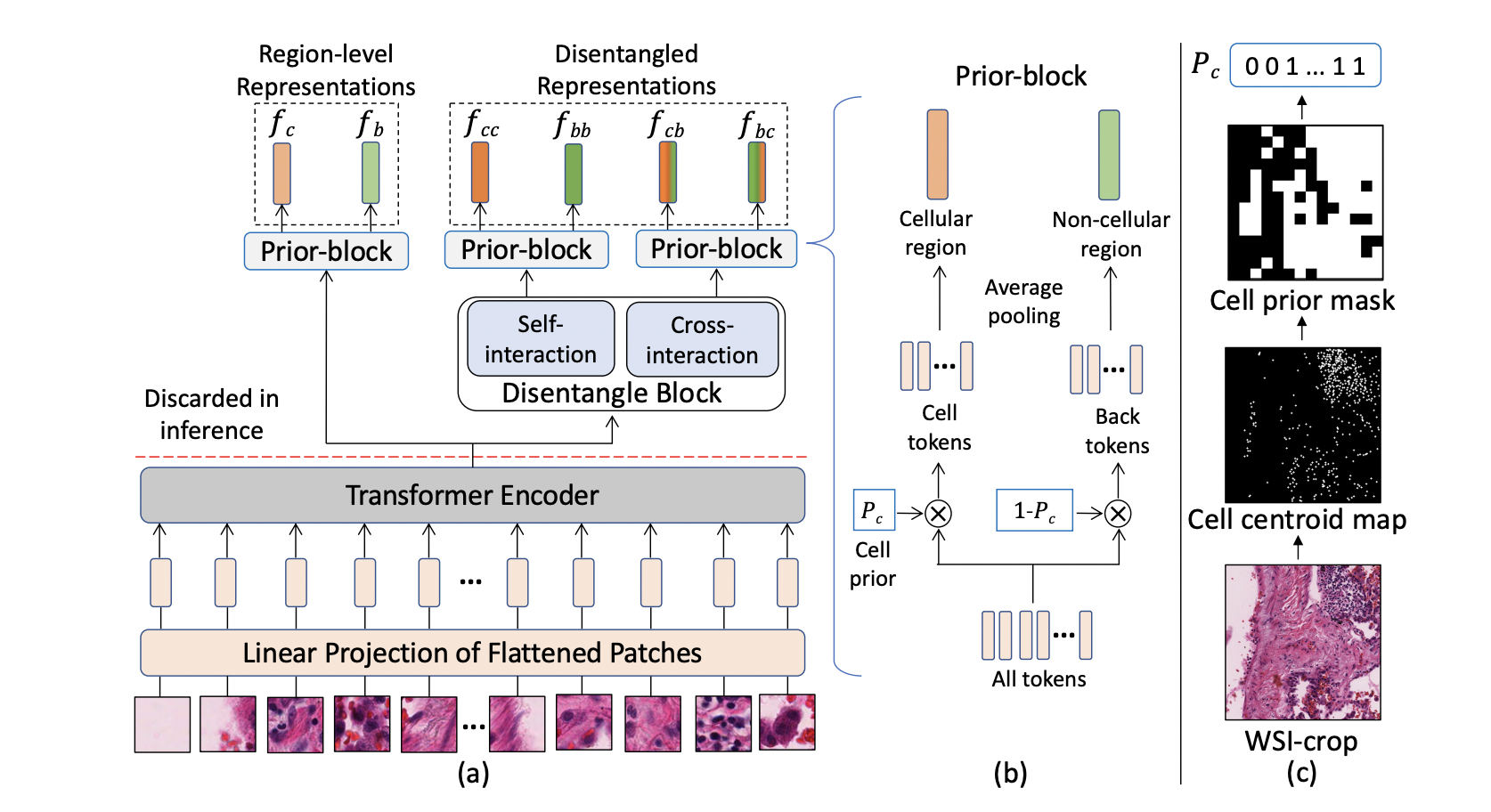

Saarthak Kapse*, Pushpak Pati*, Srijan Das, Jingwei Zhang, Chao Chen, Maria Vakalopoulou, Joel Saltz, Dimitris Samaras, Rajarsi Gupta, Prateek Prasanna. CVPR 2024. arXiv / code. Self-Interpretable MIL (SI-MIL) is the first interpretable-by-design MIL method for gigapixel WSIs; it provides de novo feature-level interpretations grounded in pathological insights for a WSI.

Aritra Dutta, Srijan Das, Jacob Nielsen, Rajatsubhra Chakraborty, Mubarak Shah. CVPR 2024. arXiv / Website. We present MAVREC, a video dataset in which we record synchronized scenes from two perspectives: a ground camera and a drone-mounted camera.

Saarthak Kapse, Srijan Das, Jingwei Zhang, Rajarsi R. Gupta, Joel Saltz, Dimitris Samaras, Prateek Prasanna. Medical Image Analysis (IF 10.9). arXiv. A diversity-inducing pretraining technique tailored to enhance representation learning in digital pathology.

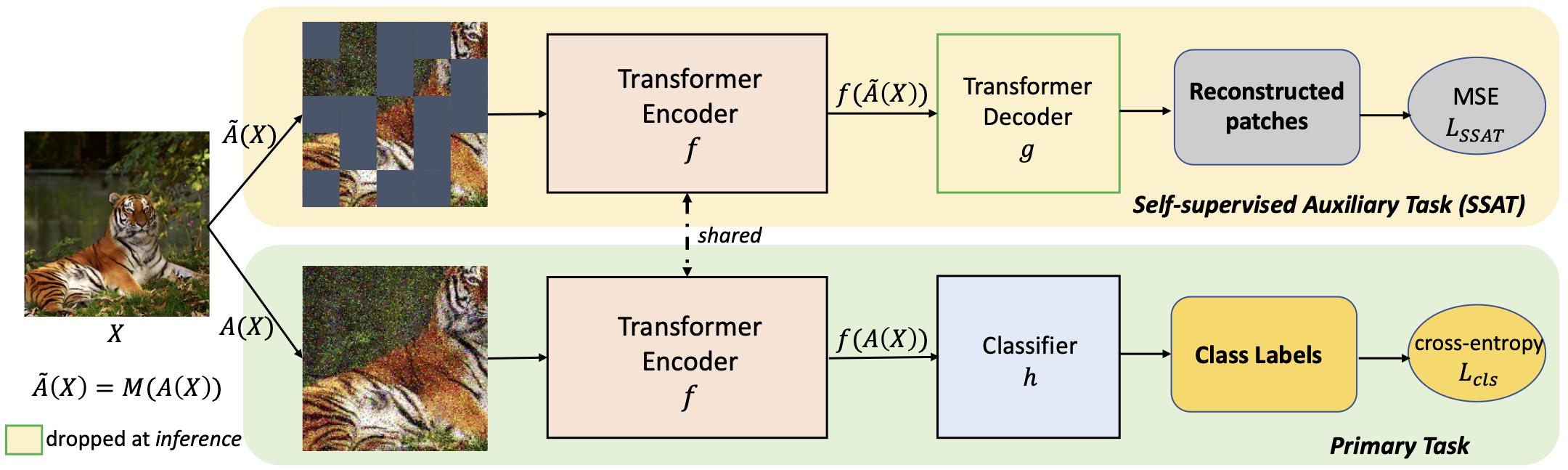

Srijan Das, Tanmay Jain, Dominick Reilly, Pranav Balaji, Soumyajit Karmakar, Shyam Marjit, Xiang Li, Abhijit Das, and Michael S. Ryoo. WACV 2024. arXiv / code / Poster / Video. This paper shows that jointly optimizing ViTs for the primary task and a Self-Supervised Auxiliary Task is surprisingly beneficial when the amount of training data is limited.

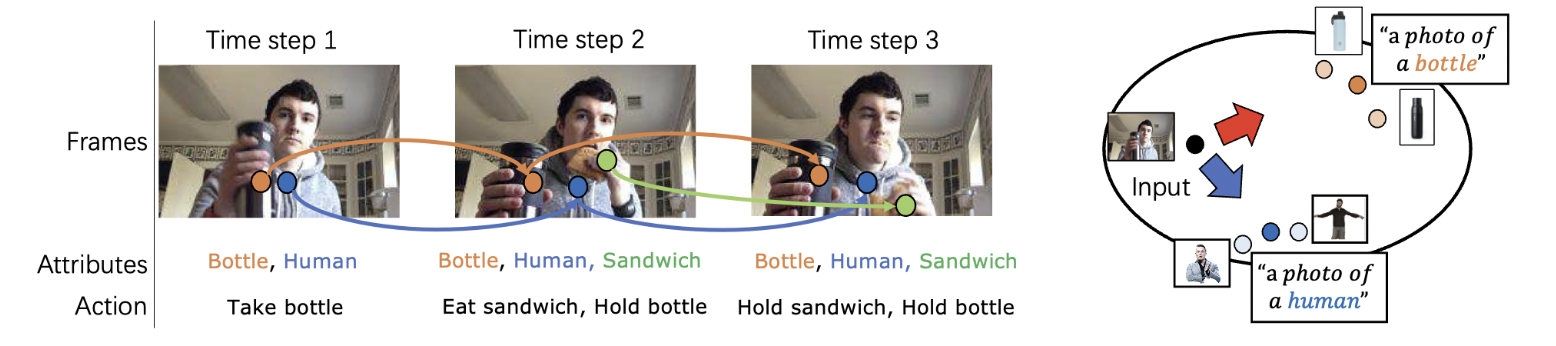

Rui Dai, Srijan Das, Michael S. Ryoo, Francois Bremond. BMVC 2023. arXiv / video. This paper explains how to utilize OpenAI's CLIP for long-term action detection in videos.

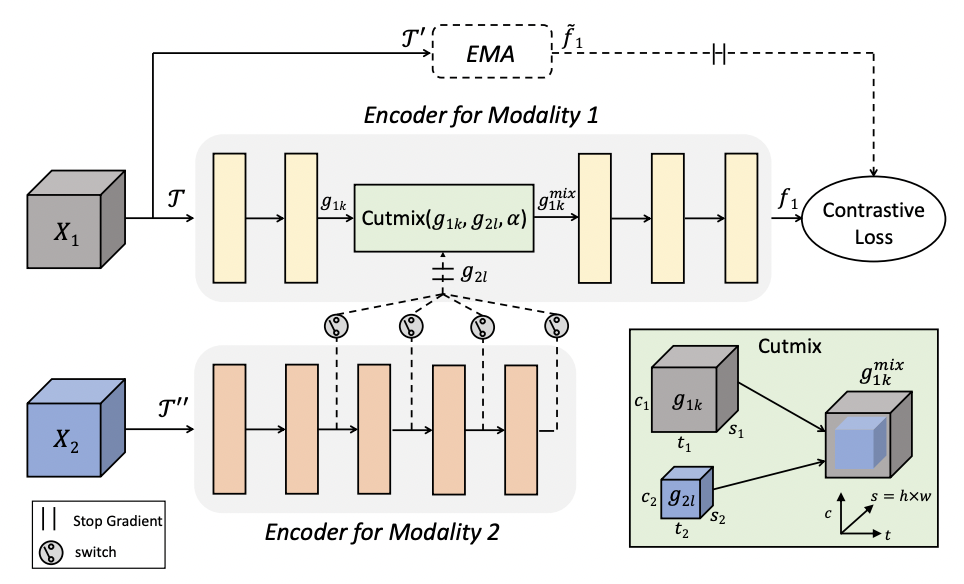

Srijan Das and Michael S. Ryoo. 18th International Conference on Machine Vision Applications, July 2023. arXiv / Poster / Best Poster Award. This paper focuses on designing video augmentation for self-supervised learning; we propose CMMC to make use of other modalities in videos for data mixing.

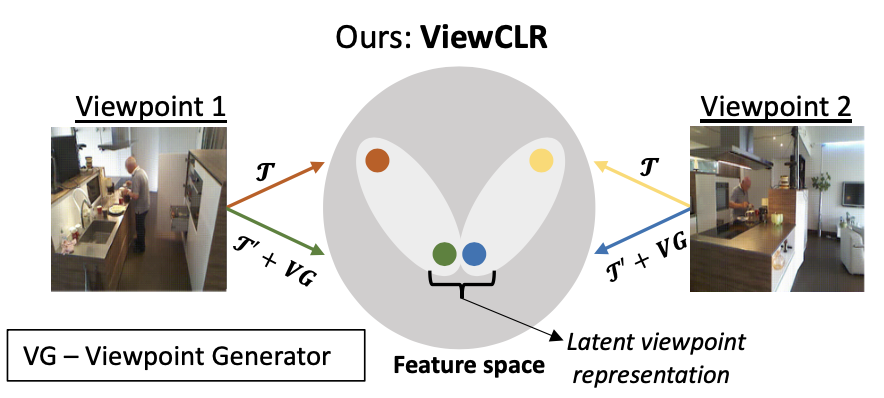

Srijan Das and Michael S. Ryoo. WACV 2023. arXiv. A framework for learning self-supervised video representation that is invariant to unseen camera viewpoints.

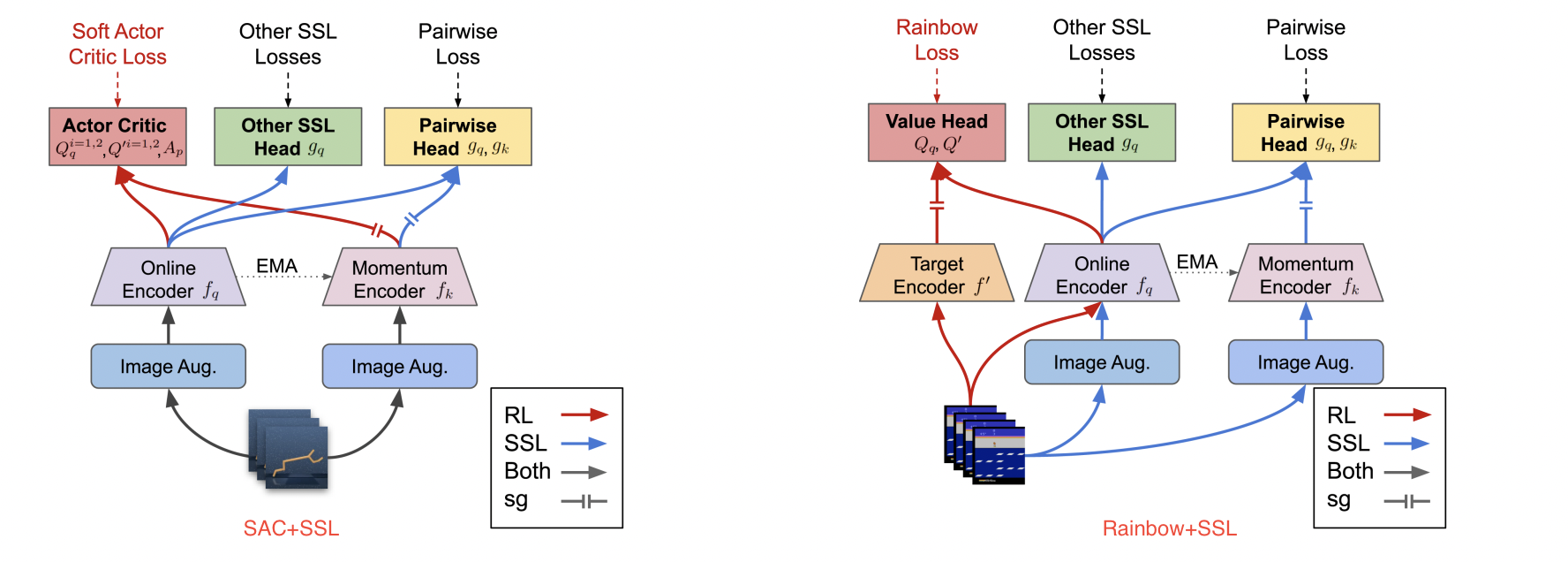

Xiang Li, Jinghuan Shang, Srijan Das, Michael S. Ryoo. NeurIPS 2022. arXiv / code. The impact of existing self-supervised losses within a joint learning framework for RL is limited, and no single method dominates across all tasks.

Jinghuan Shang, Srijan Das, Michael S. Ryoo. NeurIPS 2022. arXiv / Project Page / code. 3DTRL is a lightweight, plug-and-play layer that recovers 3D information of visual tokens and leverages it for learning viewpoint-agnostic representations.

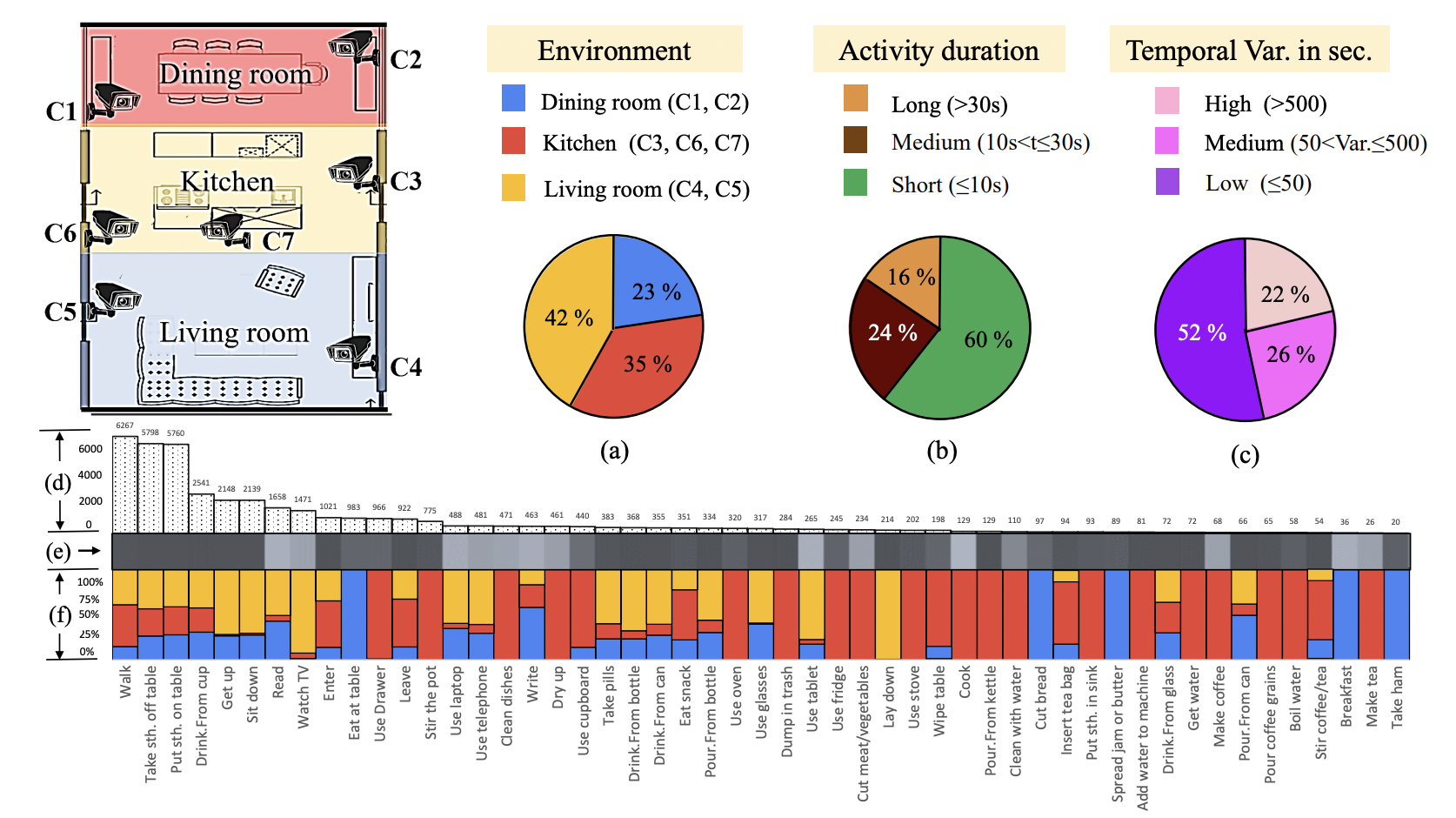

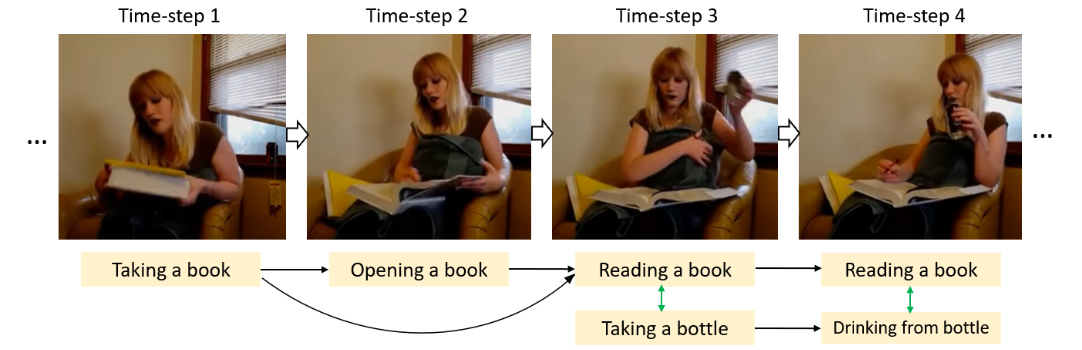

Rui Dai, Srijan Das, Saurav Sharma, Luca Minciullo, Lorenzo Garattoni, François Brémond, Gianpiero Francesca. T-PAMI 2022. Project Link / Code. TSU is a new untrimmed daily-living dataset consisting of 51 activities performed in a spontaneous manner, captured from non-optimal viewpoints.

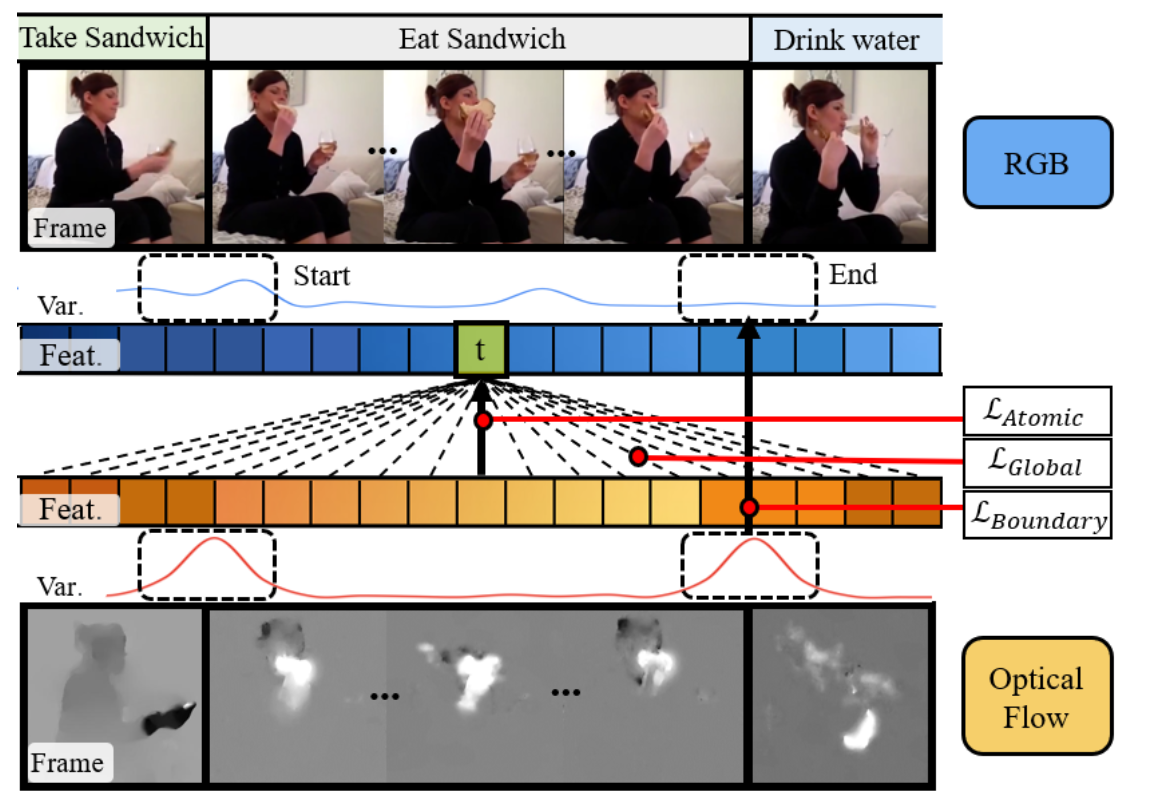

Rui Dai, Srijan Das, Kumara Kahatapitiya, Michael S. Ryoo, Francois Bremond. CVPR 2022. arXiv / code. A ConvTransformer network that explores global and local temporal relations at multiple resolutions.

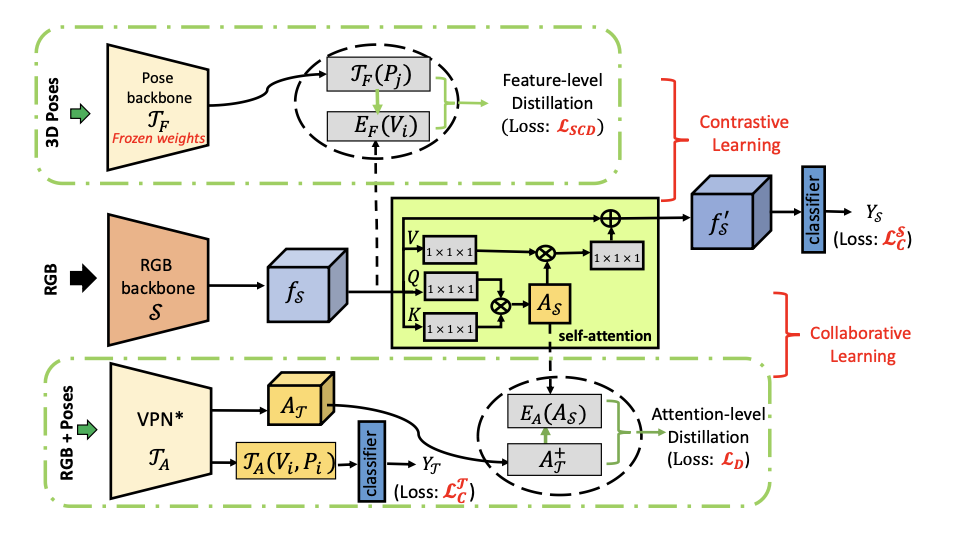

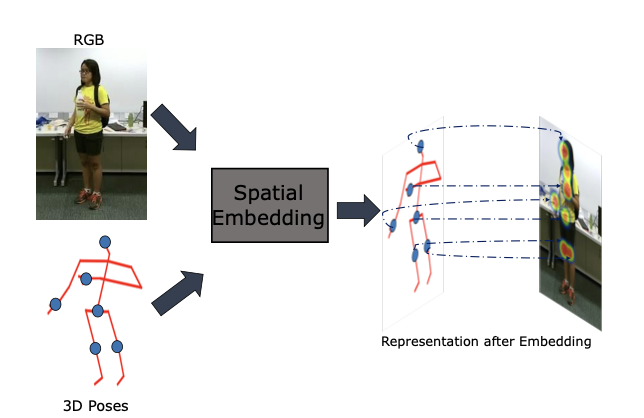

Srijan Das, Rui Dai, Di Yang, Francois Bremond. TPAMI 2021. arXiv / code. VPN++ is an extension of our VPN model (ECCV 2020). VPN++ hallucinates pose-driven features without requiring costly 3D poses at inference.

Rui Dai, Srijan Das, Francois Bremond. BMVC 2021, Oral.

Rui Dai, Srijan Das, Francois Bremond. ICCV 2021.

Rui Dai, Srijan Das, Luca Minciullo, Lorenzo Garattoni, Gianpiero Francesca, and Francois Bremond. WACV 2021. Code / Video / Poster.

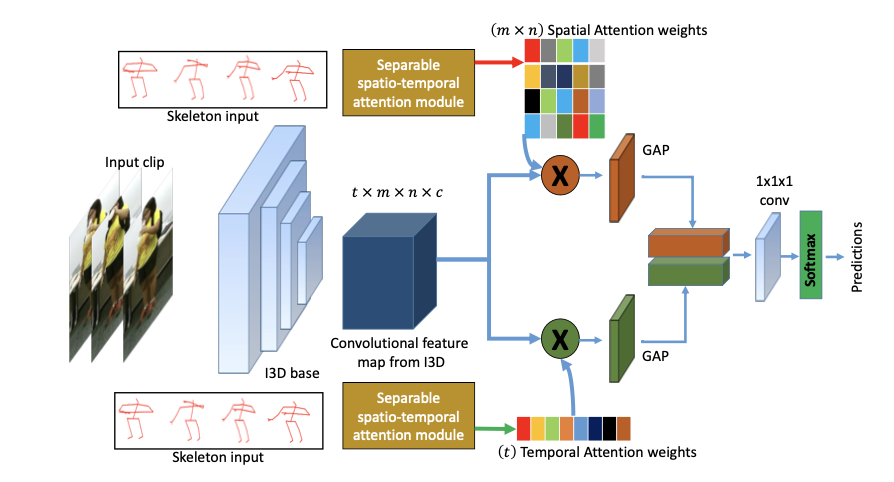

Srijan Das, Saurav Sharma, Rui Dai, Francois Bremond, Monique Thonnat. ECCV 2020. Code.

Srijan Das, Monique Thonnat, and Francois Bremond. WACV 2020.

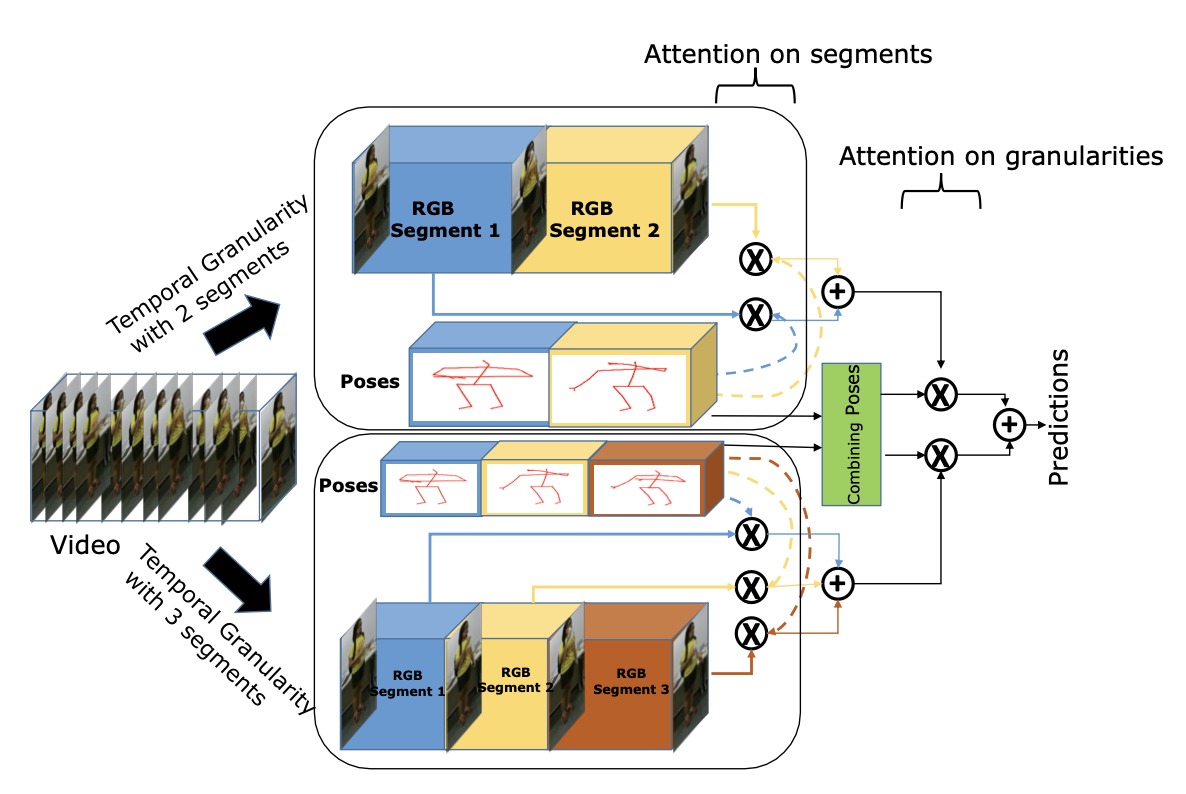

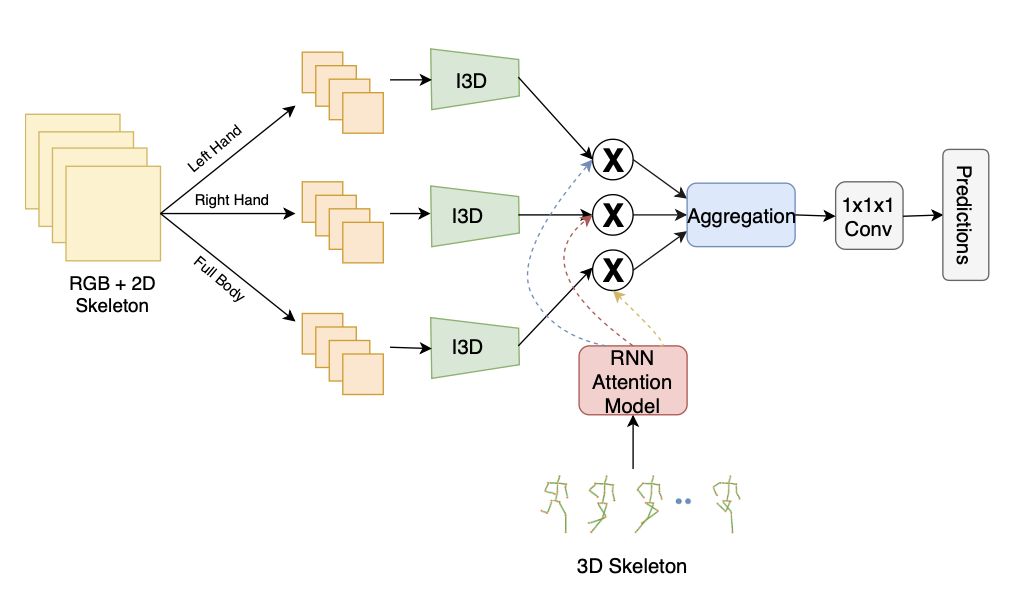

Srijan Das, Rui Dai, Michal Koperski, Luca Minciullo, Lorenzo Garattoni, Francois Bremond, and Gianpiero Francesca. ICCV 2019. Project Link / Code.

Srijan Das, Arpit Chaudhary, Francois Bremond, and Monique Thonnat. WACV 2019.